Corpus Description

Corpus Collection

From December 2016 to June 2017 we collected around 2,500 documents by asking members of the L3i laboratory, families and friends. After removing receipts which are not French, not anonymous, not readable, scribbled or too long, we captured 1969 images of receipts. To have the best workable images, we captured receipts with a fixed camera in a black room with floodlight. Receipts were placed under a glass plate to be flattened. Each photography contained several receipts, and we extracted and straightened each one of them to obtain one receipt per document. The resolution of these images is 300 dpi.

The size of images differs because of the nature of receipts: it depends both on the number of purchases and on the store that provides the receipt. The dataset contains very different receipts, from different stores or restaurants, with different fonts, sizes, pictures, barcodes, QR-codes, tables, etc. There is a lot of noise due to paper type, the print process and the state of the receipt, as they are often crumpled in pockets or wallets and generally handled with little care. Noises can be folds, dirts, rips. Ink is sometimes erased, or badly printed. This is a very challenging dataset for document image analysis.

To extract text from images, we applied Abbyy Finereader 11’s Optical Character Recognition engine. Since image quality is not perfect, so are the OCR results. We automatically corrected the most frequent errors, such as “€” symbols at the end of lines or “G” characters (for “grammes”) after sequences of 2 or 3 digits. Then, we proposed an online participative platform to correct OCR results and get a sound ground truth. The crowdsourcing of the human correction is still in progress.

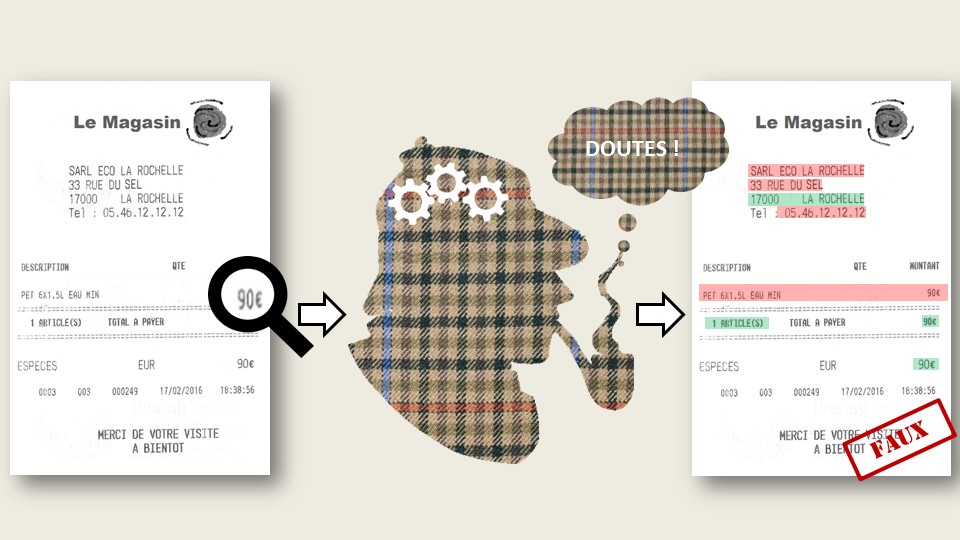

Alteration

This parallel dataset of images and texts is intended to undergo realistic forgeries. By “realistic forgeries”, we mean modifications that could happen in real life, as in the case of insurance fraud when fraudsters declare a more expensive price than true for objects that were damaged or stolen. We need to get realistic falsifications (price raises, changes of product titles, hotel address changes, etc.).

Synthesizes this step, by an automatic algorithm that randomly changes some characters for instance, would mechanically induce a bias that we absolutely wanted to avoid. From this statement, the only way to meet the previous criteria is to organize workshops with volunteers to become one-day fraudsters. To increase the diversity of quality and precision of forgeries, workshops are open to PhD students and post-docs from various labs like biologists, mathematicians, physicians, etc. To have a representative sample of real-like fraudsters, it is quite important not to restraint this job only to members of our computer science lab but to enlarge the scope of our project to a non-expert public, at least people who are not used to work with digital documents or image processing tools.

As we said, the aim of these workshops is to try to reproduce real forgeries in real conditions of fraudsters. All fraudulent acts are made using common and widespread material or tools, ie. a standard computer equipped with Windows 10® and several image editing softwares, at the user’s choice. For each receipts, image and text was modified silmutaneously. We obtained 230 altered receipts, containing several types of modification, on all receipt information.

Provided Input

Data to process is organised as a set of couples image and text files :

- An image file formatted in png, representing one receipt that can contain one or several forgeries

- A text file containing a textual transcription of the content of the receipt

Participants can use only images, only texts, or both images and texts.

For the first task, we provide for the learning phase a set of 600 documents, containing 5% of altered documents.

An XML file shows the name of the documents and whether they are genuine or fraudulent.

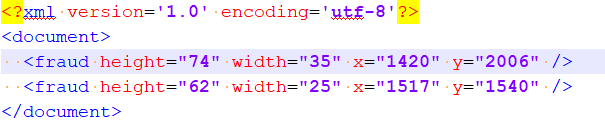

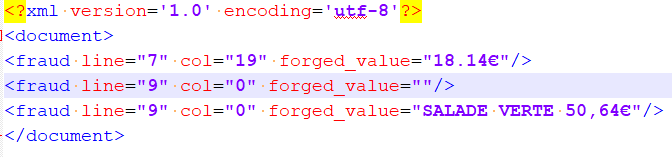

For the second task, we provide 100 altered document (images and texts), with 2 XML files defining ground truth:

- For images, XML file contains the coordinates of each modification, as follows: x and y are horizontal and vertical coordinates of the top-left point of the rectangle bounding box that have height and width. All measurements are in pixels.

- For texts, XML file contains the tokens, delimited by spaces in the text file, that are forged and the line and the column where they are located. If the whole line is modified (or append), the forged value contains the complete line. If the forged value is empty, it means that information has been deleted.

Warning: the line numbers start at 1 and the columns start at 0.

Expected output

Task 1: We expect from candidates to produce an unique XML file, similar to the Groundtruth XML file provided, specifying for each document whether it is altered (1) or not (0).

Task 2: We expect from candidates to produce an XML file for each document, following the Groundtruth format, that allow to describe if the document has been modified or not and precise the localisation of the forgery (bounding box or line).

Evaluation

With regard to the texts, the evaluation script produces a CSV file with, for each receipt, its name, and 3 measures of Jaccard index corresponding to 3 different sets:

– set of the lines covered by your localization results,

– set of the lines and column covered by your localization results,

– set of the lines, column and length of token covered by your localization results.

This choice is due to the complexity to localize a fake information in a line, and leave the possibility to have a less severe metric.

The training corpus, the test corpus and the evaluation scripts were deposited on a server whose FTP link was sent to all registered participants.